Data Compression with Singular Value Decomposition (SVD)#

Singular Value Decomposition of a Matrix#

In Data Analysis, it is often required to compress the data, either to make it more manageable or to be able to visualize the most important features, reducing redundancy. The two tasks, usually named data compression and dimensionality reduction, are mathematically equivalent and closely related to the concept of Singular Value Decomposition (SVD) of a matrix.

Recall that:

An invertible matrix \(A \in \mathbb{R}^{n \times n}\) is said to be orthogonal if \(A^T A = I\) or, equivalently, \(A^T = A^{-1}\).

Now, consider a given matrix \(A \in \mathbb{R}^{m \times n}\). It is known that it can be factorized into the product of three matrices,

where \(U \in \mathbb{R}^{m \times m}\) and \(V \in \mathbb{R}^{n \times n}\) are orthogonal matrices, while \(\Sigma \in \mathbb{R}^{m \times n}\) is a rectangular matrix which is non-zero only on the diagonal. Such decomposition is named Singular Value Decomposition (SVD) of \(A\).

Of particular interest in our analysis are the values on the diagonal of \(\Sigma\), named singular values of \(A\), and usually denoted as \(\sigma_1, \dots, \sigma_{\min \{ m, n \}}\). In particular, it is known that the singular values:

are always greater or equal to 0, i.e. \(\sigma_i \geq 0\), \(\forall i\);

are ordered in descending order, i.e. \(\sigma_1 \geq \sigma_2 \geq \dots \geq 0\);

can be used to determine the rank of \(A\), since it is equal to the number of singular values strictly greater than zero, i.e. if \(\sigma_1 \geq \sigma_2 \geq \dots \sigma_r > 0\) and \(\sigma_{r+1} = 0\) for some index \(r\), then \(r = rk(A)\).

A useful property of the SVD of \(A\) is that it can be used to compress the informations contained in \(A\) itself. Indeed, note that the SVD decomposition allows to rewrite \(A\) as the sum of simple matrices, i.e.

where \(u_i\) and \(v_i\) are the columns of \(U\) and \(V\), respectively. Each term \(u_i v_i^T\) is a rank-1 matrix named dyad, and the \(i\)-th singular value \(\sigma_i\) represents the importance of the \(i\)-th dyad in the construction of \(A\). In particular, the SVD decomposition allows to decompose \(A\) into the sum of matrices with decreasing information content.

The SVD decomposition can be used to compress the matrix \(A\) by considering its \(k\)-rank approximation \(A_k\), defined as

It has been already showed that the \(k\)-rank approximation of \(A\) is the \(k\)-rank matrix that minimizes the distance (expressed in Frobenius norm) from \(A\), i.e.

SVD of a Matrix in Python#

The functions required to compute SVD decomposition of a matrix in Python are contained in the numpy package. In the following, we will consider the example matrix reported in the code snippet below.

# Importing numpy

import numpy as np

# Consider an example matrix

A = np.array(

[

[-1, -2, 0, 1, -2, -3],

[-1, -2, -3, -2, 0, -3],

[-1, -3, 1, 3, 2, -4],

[2, 1, -1, 0, -2, 3],

[0, -3, -1, 2, -1, -3],

[1, -3, 2, 6, 0, -2],

[-3, 1, 0, -4, 2, -2],

[-2, 2, -2, -6, -2, 0],

[-3, -1, 2, 0, 2, -4],

[2, -2, 0, 4, -1, 0],

]

)

# Measure the shape of A: which is the maximum rank?

m, n = A.shape

print(f"The shape of A is: {(m, n)}.")

# Compute the SVD decomposition of A and check the shapes

U, s, VT = np.linalg.svd(A, full_matrices=True)

print(U.shape, s.shape, VT.shape)

# Define the full matrix S

S = np.zeros((m, n))

S[:n, :n] = np.diag(s)

The shape of A is: (10, 6).

(10, 10) (6,) (6, 6)

Here we computed the SVD of A through the function np.linalg.svd(), that takes as input a matrix and returns a triplet U, s, VT, representing the matrices \(U\), \(V^T\) and a vectorized version of \(\Sigma\) that only contains the diagonal (to save memory!).

Note that, in some situations, it can be useful to compute the full matrix \(\Sigma\), as we did at the bottom of the code snippet above.

Warning

If the full matrix \(\Sigma\) can be avoided, do not construct it explicitly.

Exercise: Verify that the above algorithm works as expected, by proving that \( A \approx U \Sigma V^T \iff || A - U \Sigma V^T ||_2 \approx 0.\)

The numerical rank of \(A\)#

Exercise: Compute the rank of \(A\) by using the formula: \( rk(A) = r \text{ s.t. } \sigma_r > 0, \sigma_{r+1} = 0\), and compare it with the output of the built-in function in

numpy.

The \(k\)-rank approximation#

Exercise: Compute the \(k\)-rank approximation \(A_k\) of \(A\). Specifically, write a Python function that takes as input an integer \(k \leq \min \{ m, n \}\) and computes the \(k\)-rank approximation \(A_k = \sum_{i=1}^k \sigma_i u_i v_i^T\). Then, test it on a matrix of your preference and compute the approximation error in Frobenius norm, \(\| A - A_k \|_F\).

SVD for Image Compression#

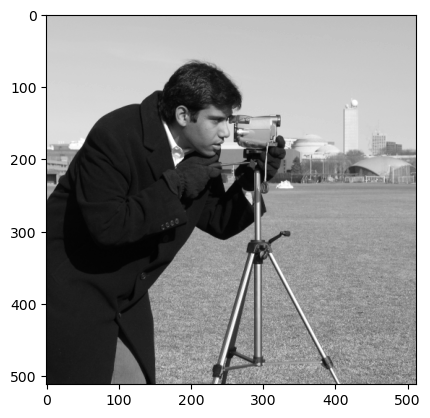

From a computational point of view, a grey-scale image is a matrix with shape (height, width), such that the element in position \(i, j\) contains the intensity of the pixel in the corresponding position. An RGB image is a triplet of matrices such that in position \(i, j\), each of the three matrices represents the amount of Red, Green and Blue in the corresponding pixel.

To work with images, we consider the skimage.data submodule:

import skimage

# Loading the "cameraman" image

x = skimage.data.camera()

# Printing its shape

print(f"Shape of the image: {x.shape}.")

Shape of the image: (512, 512).

To visualize a matrix as an image, it can be used the plt.imshow() function from the matplotlib.pyplot module. If the image is a grey-scale image (as it is the case for the camera image we are considering), it is required to set the cmap='gray' option to visualize it as a grey-scale image.

# Visualize the image

import matplotlib.pyplot as plt

plt.imshow(x, cmap="gray")

plt.show()

Besides the visualization, the image is still a matrix and all the techniques developed for matrices can be used on images. In particular, the \(k\)-rank approximation, by truncating the least important informations of the image, can be used to compress it with minimal information loss.

We recall that the \(k\)-rank approximation \(X_k\) of the image \(X\) is defined as

where each \(\sigma_i\) is a scalar number, \(u_i\) is an \(m\)-dimensional vector, while \(v_i\) is an \(n\)-dimensional vector. As a consequence, the number of values required to memorize \(X_k\) is \(k(m + n + 1)\), while the number of values required to memorize the whole image \(X\) is \(mn\). As a consequence, the compression factor (i.e. the percentage of pixels we saved in memorizing \(X_k\) instead of \(X\)) can be computed as:

Exercise: Using the function defined in the previous exercise, compute the \(k\)-rank approximation of the cameraman image \(X\) for different values of \(k\) and observe the behavior of each reconstruction. Also, compute and plot the compression factor \(c_k\) for each value of \(k\).